In recent years, the rise of artificial intelligence has sparked heated debates across numerous industries, with many professionals anxiously asking, “Will AI take my job?” As AI technology continues to advance at a rapid pace, questions about how to use AI at work ethically, why people hate AI, and whether AI is fundamentally unethical have become increasingly urgent. This article presents a brutally honest discussion from Rob and Adam of Opace Digital, a UK-based AI agency, who tackle these contentious topics with refreshing candour.

Source – Research by EY revealed that 75% of employees are concerned AI will make certain jobs obsolete, and 65% are anxious about AI replacing their own jobs.

The Love-Hate Relationship with AI Technologies

The conversation around AI often begins with strong emotions. As one digital creator, Embry, expressed in a discussion with Rob and Adam: “I LOATHE the idea of AI Art and such, but I can’t help but periodically wonder if I would use it for some basic conceptual crap that I just don’t have time to do. Like voice lines and such, or helping flesh out a little bit of a written scene here and there.”

This sentiment captures the internal conflict many creators face – standing by their principles while recognising potential practical applications that could benefit their workflow. This dichotomy lies at the heart of the debate around “how to use AI at work”: ethical concerns versus practical utility.

Will AI Take My Job? The Primary Concerns with AI Implementation

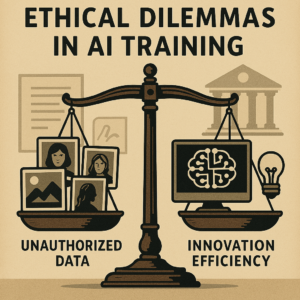

Rob, identified as ScregnØg in the conversation, offered a thoughtful analysis highlighting two main issues with current AI systems that fuel the “will AI take my job” anxiety:

- Rights and consent in training data: “A lot of these models have been trained on data that they didn’t have the rights for. Data that the owner of may well have refused to be used for training at all, were they given the chance.” This ethical concern centres on how AI models are developed and whether artists’ work was appropriated without proper consent or compensation. However, Rob noted this is “a solvable problem which will result in ethically trained models eventually becoming dominant. This will take time, but it’ll happen through regulation if nothing else.”

- Job displacement risks: “Jobs are definitely at risk. There are weird things that these models can’t do yet, things you wouldn’t expect considering the crazy stuff they can do, but this will change with time.” Rob pointed to examples of past limitations that have since been resolved: “Remember the ‘too many fingers’ stuff last year, well that was solved in time.” This progression suggests that technical barriers preventing wider AI adoption are gradually falling away.

Rob continued with a sobering assessment of the business reality: “We’re going to reach a point where from the perspective of a business, why hire an employee full time when you can just get a cheap and easy AI to perform the same task for next to nothing? The business cares about the result, and most businesses care about expense, so therefore AI will undoubtedly result in people losing work.”

This frank acknowledgment directly addresses the “will AI take my job” question that concerns so many workers across various industries. It’s not just creative fields that face disruption – AI’s capabilities continue to expand into areas previously thought safe from automation.

How to Use AI at Work: Adapting Rather Than Resisting

Despite these concerns, Rob advocated for a pragmatic approach to AI adoption:

“So, knowing it’s here, what do you do? You could shun it, refuse to go anywhere near it, stand on principle and hate it with every fibre of your being, that is an option, but one that will likely just result in issue 2 above. Losing out on employment that you yourself could have done.”

Instead, he suggested integration as the answer to “how to use AI at work”: “Dabble with it. Work out what it’s good at and what it’s not. Find parts of your workflow that it can bolster, make it another tool in your toolkit for what you do.”

This approach casts AI as something creative professionals direct rather than something that replaces them. Rob’s advice was to “make yourself useful by knowing how best to utilise it to deliver better and faster results than you could have done previously.” In his words, “SECURE DOMINANCE OVER THE AI!”

This strategy offers a practical response to the question “will AI take my job?” By becoming proficient with AI tools, professionals can position themselves as value-adding intermediaries rather than replaceable components.

Why Do People Hate AI? Emotional Reactions Versus Practical Reality

Adam, the other participant in the discussion, critiqued what he saw as emotionally driven resistance to technological change, addressing the question of “why do people hate AI”:

“It’s like people are led with emotion these days, not logic. It’s like before even thinking about stuff objectively and critically, they just wanna jump on a bandwagon of being offended and being, like, associated with the other little people that are offended at the same thing so they can all live, like, happy little lives being hateful towards everything that they don’t like. It’s really stupid.”

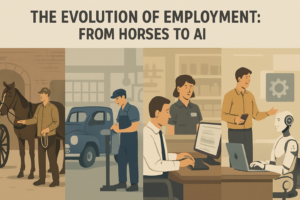

Adam drew a historical parallel to illustrate his point about why some people hate AI: “With this mentality, we’d still be riding horses rather than driving cars. You know, it’s like, ‘Oh, these cars put stable hands at risk of losing their jobs.’”

This comparison highlights a recurring pattern throughout history – initial resistance to technological advancements that eventually become commonplace. It suggests that much of the hostility toward AI stems from fear of change rather than rational assessment of its potential benefits and drawbacks.

Is AI Unethical? Examining Contradictions in Anti-AI Arguments

Adam further probed the question “is AI unethical?” by pointing out what he perceived as contradictions in the positions of AI critics:

“It cracks me up. You see people contradicting themselves. All these people, right, that are saying, ‘Oh, AI is gonna take people’s jobs,’ they’ll happily still pirate content. They’ll do stuff behind the scenes. And it’s like, well, what about the people’s jobs that you’re violating by infringing on the copyright? They’re not about it. It’s stupid.”

This observation highlights an important dimension of the “is AI unethical” debate – the selective application of ethical concerns. While some may criticise AI on ethical grounds, they may simultaneously engage in other behaviours that raise similar ethical questions about creator rights and compensation.

The Inevitability of Technological Advancement

A recurring theme in the discussion was the inevitability of technological progress and the need for adaptation, directly addressing why fighting against AI might be futile:

“You can’t just stay static,” Adam argued. “Everything changes, and if you just stand around shaking a stick at what’s happening, you’re just gonna get left behind, and then you’re gonna have to reluctantly crawl back to what’s happening anyway.”

This sentiment was reinforced through a vivid metaphor: “It’s like a kid in a supermarket that’s throwing a tantrum and stamping their feet. The parents are slowly walking away. The kid’s standing there getting further and further behind. And eventually the kid reluctantly will just kind of stomp his feet and run and catch up. That’s what all these nerds will do. This is what all these people would do eventually when it’s undeniable that it has to be used.”

This perspective suggests that regardless of current resistance, AI integration is inevitable, and the question of “how to use AI at work” will eventually become relevant for virtually everyone.

Consumer Behaviour and Market Realities

The discussion also touched on how most consumers prioritise utility over principle, which helps explain why businesses will continue to adopt AI despite ethical concerns:

“The majority of people, when it comes to markets and buying products or services, they don’t give a fuck. The majority of people just genuinely don’t give a fuck. They’ll just buy whatever they want from companies,” Adam observed.

He continued: “You’d get the outrage online. Ultimately, though, people would just buy it and not be bothered because it’s the product that people care about.”

This observation suggests that despite vocal opposition to certain technologies or companies, market forces often prevail as most consumers make decisions based on convenience, price, and utility rather than ethical concerns. This reality drives business adoption of AI, further pressuring individuals to adapt or risk being left behind.

Why Do People Hate AI? The Psychology of Contrarianism

Adam offered additional insights into why people hate AI, suggesting that some opposition stems from a desire to appear contrarian or insightful:

“It’s cool and trendy to jump on these hate kind of trains now, isn’t it? It’s cool to be a market corrector. It’s like if you see a bunch of people liking something, ‘Oh, we have to bring it down.’ Or even just contrarians that just want to seem like they’ve got insight over the matter. And I think a lot of people are just negative, hateful people as well.”

This perspective frames some AI opposition as a form of social positioning rather than genuine ethical concern, offering another dimension to understanding “why do people hate AI.” It suggests that some criticism may be motivated more by social dynamics than by substantive concerns about the technology itself.

How to Use AI at Work: The Need for Adaptability in a Changing World

The conversation concluded with reflections on individual responsibility to adapt to technological change, offering practical guidance on how to approach AI in the workplace:

“If it costs people’s jobs, then you know what? Jobs have to be adapting. You have to adapt. You have to find opening in the changing landscape of the world. Like, when it comes to work and technology, you have to be malleable,” Adam stated firmly.

This perspective places responsibility on individuals to evolve with technological advancements rather than expecting the world to remain static: “You have to find a slot within a changing world for you, and you have to adapt. The world doesn’t need to adapt to you as a person.”

Adam criticised what he perceived as entitlement in some attitudes toward technological change: “That’s the kind of outlook that a lot of the young people have. ‘You will refer to me by they, them. You will do this and that. You will ignore AI and allow me to sculpt this world to my…’ I don’t know where it comes from, spoiled upbringings.”

This somewhat provocative commentary highlights a fundamental tension in discussions about “how to use AI at work” – whether adaptation should primarily come from individuals or from systems and institutions.

Will AI Take My Job? A Nuanced Approach to AI Integration

As AI technology continues to evolve, the question “will AI take my job?” remains complex. The discussion between Rob and Adam reveals several possible approaches:

- Complete rejection: Refusing to engage with AI on principle, which both participants viewed as ultimately self-defeating

- Selective adoption: Using AI for specific tasks while maintaining creative control

- Full integration: Embracing AI as a collaborative tool to enhance human creativity and productivity

For many professionals concerned about whether AI will take their job, the middle path of selective adoption offers a compromise between ethical concerns and practical benefits. By understanding AI’s capabilities and limitations, workers can maintain their distinctive skills while leveraging new tools to enhance specific aspects of their workflow.

As Rob summarised: “Instead of letting it eventually replace you, make yourself useful by knowing how best to utilise it to deliver better and faster results than you could have done previously.”

Is AI Unethical? Balancing Innovation with Responsibility

The question “is AI unethical?” emerged throughout the discussion as a complex issue without simple answers. While both participants acknowledged legitimate ethical concerns around data rights and job displacement, they also suggested that ethical frameworks could evolve alongside the technology itself.

Rob’s observation that ethical training data issues are “a solvable problem which will result in ethically trained models eventually becoming dominant” through “regulation if nothing else” suggests that ethical concerns, while valid, need not permanently impede technological progress.

This nuanced perspective suggests that rather than asking “is AI unethical?” in absolute terms, a more productive approach might involve asking how AI can be developed and deployed ethically, with appropriate guardrails and considerations.

Why Do People Hate AI? Beyond Fear to Constructive Engagement

Throughout the discussion, various explanations for why people hate AI emerged:

- Fear of job loss and economic insecurity

- Concerns about exploitation of creative work

- Resistance to change and technological disruption

- Social and identity-based opposition

- Contrarianism and performative criticism

Understanding these various motivations for why people hate AI can help foster more productive conversations about its development and implementation. By acknowledging legitimate concerns while challenging knee-jerk opposition, stakeholders can work toward more thoughtful integration of AI technologies.

How to Use AI at Work: Practical Strategies for Professionals

Based on the discussion between Rob and Adam, several practical strategies emerge for professionals wondering how to use AI at work:

- Experimentation and learning: As Rob suggested, “Dabble with it. Work out what it’s good at and what it’s not.”

- Workflow integration: Identify specific tasks where AI can enhance rather than replace human work

- Skill complementarity: Develop skills that complement AI capabilities rather than compete with them

- Ethical awareness: Understand the ethical implications of AI use in your field

- Adaptability: Cultivate a mindset of continuous learning and adaptation

By approaching AI as a tool to be mastered rather than a threat to be feared, professionals can position themselves advantageously in an evolving workplace landscape.

Will AI Take My Job? The Broader Societal Perspective

While much of the discussion focused on individual adaptation, the question “will AI take my job?” also has broader societal dimensions. As Rob noted, from a business perspective, the economic incentives to replace human workers with AI are strong and growing stronger.

This reality suggests that even as individuals adapt, society may need to consider systemic responses to technological unemployment. Though not explicitly addressed in the conversation, these might include educational initiatives, social safety nets, or new economic models that account for changing patterns of work and productivity.

Is AI Unethical? The Role of Regulation and Governance

The question “is AI unethical?” points toward the importance of governance frameworks. Rob’s mention of regulation as a path toward ethically trained models highlights the role that policy can play in shaping AI development.

Effective governance might address concerns about training data rights, algorithmic bias, privacy, and other ethical dimensions of AI. Rather than leaving ethical considerations solely to market forces or individual conscience, thoughtful regulation could help ensure that AI development aligns with broader societal values.

How to Use AI at Work: Industry-Specific Considerations

While the discussion between Rob and Adam offered general principles for how to use AI at work, the specific applications and implications vary across industries. In creative fields, AI might serve as a conceptual tool or efficiency enhancer. In data-intensive domains, it might augment analytical capabilities. In customer service, it might handle routine inquiries while freeing human agents for more complex interactions.

Understanding industry-specific AI applications can help professionals develop targeted strategies for integration that preserve and enhance their value in the workplace.

Why Do People Hate AI? The Media Narrative

The discussion did not address media coverage directly, but it did touch on the social dynamics that might contribute to why people hate AI. Adam’s observations about “bandwagons” and “hate trains” suggest that social reinforcement, whether online or through media narratives, can amplify opposition to new technologies.

This perspective highlights the importance of thoughtful, nuanced public discourse about AI – discourse that acknowledges both legitimate concerns and potential benefits without defaulting to either uncritical techno-optimism or reactionary technophobia.

Conclusion: Adaptation as the Path Forward

The conversation between Rob and Adam highlights a fundamental reality: technological progress continues regardless of individual resistance. Those who adapt their skills and workflows to incorporate new tools often find themselves better positioned for future opportunities.

Rather than viewing AI as either a complete threat or saviour, a nuanced approach recognises both its limitations and potential benefits. By engaging thoughtfully with these tools, professionals can help shape how AI is integrated into their fields rather than merely reacting to changes imposed upon them.

As Rob put it: “So, that’s where we are, and whether we like it or not, the tech is here and here to stay, for better or worse etc etc.”

This realistic assessment suggests that the most productive approach to questions like “will AI take my job?”, “how to use AI at work”, “is AI unethical?”, and “why do people hate AI” involves neither uncritical acceptance nor blanket rejection, but rather thoughtful engagement that acknowledges both opportunities and challenges.

As industries continue to evolve alongside AI capabilities, the most resilient strategy appears to be one of informed adaptation – learning to work with new technologies while maintaining the human elements that make work meaningful and distinctive.

Transcription

The above article and podcast were created from an internal conversation between Rob and Adam at Opace, transcribed below:

The Dilemma:

Y’know. I LOATHE the idea of Ai Art and such, but I can’t help but periodically wonder if I would use it for some basic conceptual crap that I just don’t have time to do. Like voice lines and such, or helping flesh out a little bit of a written scene here and there. Until I have the time and money to get them properly produced.

Sigh. But I do loathe Ai…

Rob:

Oooh I have much opinions on this matter!

I understand the disdain for the most part. There are 2 negatives about it all that I see:

• A lot of these models have been trained on data that they didn’t have the rights for. Data that the owner of may well have refused to be used for training at all, were they given the chance. This is a problem, but it’s a solvable one which will result in ethically trained models eventually becoming dominant. This will take time, but it’ll happen through regulation if nothing else.

• Jobs are definitely at risk. There are weird things that these models can’t do yet, things you wouldn’t even expect considering the crazy stuff they can do, but this will change with time. Remember the ‘too many fingers’ stuff last year, well that was solved in time. We’re going to reach a point where from the perspective of a business, why hire an employee full time when you can just get a cheap and easy AI to perform the same task for next to nothing? The business cares about the result, and most businesses care about expense, so therefore AI will undoubtedly result in people losing work.

So, that’s where we are, and whether we like it or not, the tech is here and here to stay, for better or worse etc etc.

So, knowing it’s here, what do you do? You could shun it, refuse to go anywhere near it, stand on principle and hate it with every fiber of your being, that is an option, but one that will likely just result in issue 2 above. Losing out on employment that you yourself could have done.

Orrrrrrrrrrr (if you hadn’t guessed, this is where I’m at), dabble with it. Work out what it’s good at and what it’s not. Find parts of your workflow that it can bolster, make it another tool in your toolkit for what you do. Instead of letting it eventually replace you, make yourself useful by knowing how best to utilise it to deliver better and faster results than you could have done previously. SECURE DOMINANCE OVER THE AI!

I appreciate that my stance there is almost entirely based on employment, and not on creative pursuits like hobbies etc. But even when it comes to hobbies I’ve been playing around with it, which is another story entirely.

Anyway. Where were we?

Adam:

Yeah, exactly. And it’s like people are led with emotion these days, not logic. It’s like before even thinking about stuff objectively and critically, they just wanna jump on a bandwagon of being offended and being, like, associated with the other little people that are offended at the same thing so they can all live, like, happy little lives being h- hateful towards everything that they don’t like. Um, it’s really stupid.

I, I’ve said to both of you, I think, on the phone at separate times, that, yeah, I mean, with this mentality, we’d still be, I’ve said today, yesterday, we’d still be riding horses rather than, like, driving cars. You know, it’s like, uh, “Oh, th- these cars put, uh, like, I don’t know, stable hands at, at risk of losing their jobs.” And, like, you know, uh, all the other stuff that’s to do with, uh, you know, the upkeep of the a- uh, of horses and look- looking after… I’m getting too deep into that example. But do you know what I mean? You know what I mean?

It’s like you can’t just stay static. Everything changes, and you s- and it’s overstated, but it’s like if you just stand around, like, shaking a stick at what’s happening, you’re just gonna get left behind, and then you’re gonna have to reluctantly crawl back to what’s happening anyway. Because, I mean, to look at it, like, you get the f- right? You get the f- it’s, it’s like with business and, and stuff like where, where, uh, and products, you get the few vocal people that hate, “Oh, no, boycott Amazon. They’re, like, unethical. They do this and that.” “Oh, boycott Tesla.” They, you know.

But ultimately, the majority of people, when it comes to, like, markets and, and buying products or services, they don’t give a f- like, the majority of people just genuinely don’t give a fuck. They’ll just buy whatever they want from companies. The, the… I mean, some… Don’t get me wrong. The- this, like, younger generation, it’s like they have a weird, like, brand allegiance that is just, like, strange. And I suppose a lot, you know, I mean, maybe it’s a young person thing, but, um, the majority of people would just… eh, you could be like Hitler that offers a product that no one else has done before. You’d get the outrage online. Ultimately, though, people would just, like, buy it and not be bothered because it’s the product that people care about.

Um, anyway, that’s a bit of a long-winded rant, and I’ve s- I haven’t said anything new. It’s just all the same s- same stuff we’ve already talked about before, but… I mean, I, I, I… Like, Rob, me and you have spoke, sp- spoke about this a lot as well. It’s like, I d- it’s cool and trendy to jump on these, like, um, hate kind of trains now, isn’t it? It’s like, “Oh, Trump’s done this, Trump’s done that.” And don’t get me wrong, some of it is, like, worthy of criticism, um, because no one’s perfect and, you know, what it is, what it is.

But it’s like, it’s cool to be a market corrector. It’s like if you see a bunch of people liking something, “Oh, we have to bring it down.” Or even just contrarians that just want to, like, s- seem like they’re, they’ve got insight over the matter. Um, and, and I think a lot of people are just negative, hateful people as well, so.

Um, anyway, um, it, it… I mean, it cracks me up. You see people contradicting themselves. Anyway, all these people, right, that are, like, saying, “Oh, AI is gonna take people’s jobs,” they’ll happily still pirate content. They’ll, they’ll do stuff behind the scenes. And it’s like, well, what about the people’s jobs that you’re violating by, you know, infringing on the copyright? They’re just, they’re not about it. It’s fucking stupid.

Um, I don’t know. Uh, so I was just moving around. I don’t know where I’m going with this, actually. I mean, I, like, I’m not adding anything to the conversation. I’m just saying that, like, people need to get with the times, um, and realize that A, like, like, s- I’m just echoing what you said, basically, Rob. Like, it’s not going anywhere. You, like, what are you gonna do? Stand…

It’s like a kid in a supermarket that’s throwing a tantrum and standing their feet. The parents are slowly walking away. The kid’s standing there getting further and further behind. And eventually the kid reluctantly will just kind of, like, you know, stomp his feet and run and catch up. That’s what all these nerds will do. Not, well, not even nerds. This is what all these, like, you know, people would do eventually when it’s undeniable that it, i- it has to be used.

If it c- if it costs people’s jobs, then you know what? Jobs have to be adapt, uh, adapting. You have to adapt. You have to find opening in the changing landscape of the world. Like, when it comes to work and technology, you have to be malleable. You have to make it work. You can’t demand that the whole world caves to your needs. And that’s the kind of, like, outlook that a lot of the young people have.

“You will refer to me by they, them. You will do this and that. You will, um, ignore AI and allow me to… s- sculpt this world to my…” I don’t know where it comes from, spoiled upbringings. I don’t, [laughs] I don’t know what it is, but, uh, you know, it’s… You have to find a, a slot within a changing world for you, and you have to adapt. The world doesn’t need to adapt to you as a person. That’s what they need to understand, isn’t it?

I’m half asleep. I don’t know. I’m… If I could… [yawns] I was up really late night—

Uh, so yeah, anyway, um, bit of a pointless ramble, but there you go. I’ve gotta just sum that up with, yeah, I agree with you, Rob.